(“TL;DR”? Don’t bother! ;))

Since the early 2000s I have been using BIND to provide name service for the LANs that I set up and/or maintain. Some time ago it became necessary to find a solution for a use case where an internal name server needed to override and/or add some subdomains to an externally authoritative zone, while resolving the rest [of that zone] from its authoritative source (and, of course, provide internal resolution for the LAN’s internal zone(s)).

The only resolver I found that provides partial (“transparent”) override of an external zone is Unbound. However, Unbound is purely a recursive (iterating) resolver, and while it provides the option to inject “local zone data” which behaves as if it were authoritative (for all practical purposes), it is not an authoritative server. Unbound is very efficient in what it does, and it’s secure, but to provide a fully functional name server it needs to be coupled with an authoritative server. I spent some time trying out NSD, considering whether I could adopt it as the authoritative zone server, especially since, like Unbound, it’s made by NLnet Labs. However, after some testing I opted to keep BIND as the authoritative server, mainly because in LAN use the goal was to select the most versatile components: features trumped [possible] security benefits. Beyond my overall familiarity with BIND, with NSD I missed ACLs and the $GENERATE statement, both of which modern BIND offers.

The next steps were to make BIND and Unbound play well together, and to figure out how Unbound could be mirrored, since it doesn’t natively include IXFR-style zone transfers or, in fact, any type of replication. If I were to use Unbound to partially override externally authoritative zones, I didn’t want to have to manually keep the overriding zone data in sync on multiple servers.

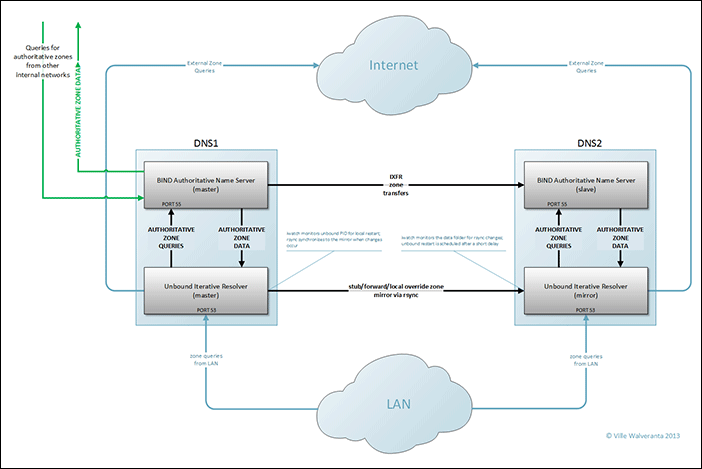

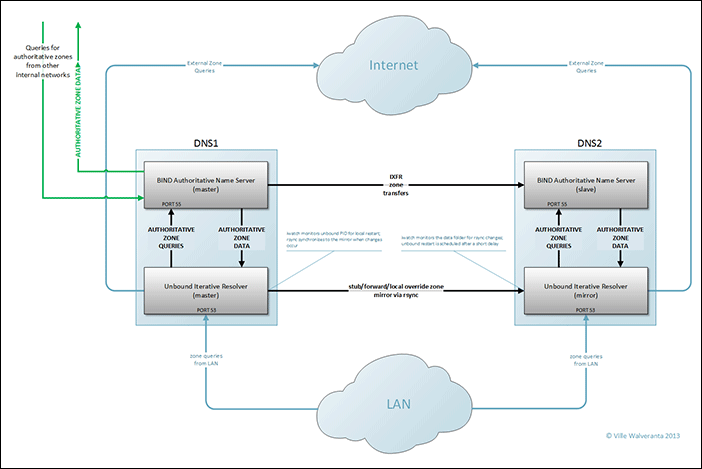

Below is a diagram of the complete system. For clarity’s sake only one mirror (“DNS2”) is illustrated; adding more mirrors is a trivial task.


Once I had set up the BIND master and the BIND slave, I configured standard IXFR zone transfers between them and tested that they worked. Since that is outside the scope of this article, I’ll let you research it on your own (if you’re not already familiar with the process). Once I got it working, I set BIND to listen on a LAN address on the non-standard port 55. This is because Unbound, which serves the DNS queries from the LAN, listens on the default DNS port 53. BIND’s port 55 accepts queries from the Unbound server (in this case, the same server), and also from other LAN segments whose respective resolvers might forward queries for the zones this BIND installation is authoritative for. In some cases BIND also provides in-addr.arpa (reverse) resolution externally (access to internal zone resolution or recursion is prohibited by an ACL), and in such cases the firewall NAT translates the external port 53 to port 55 on BIND.

With Unbound configured, I overrode its default with do-not-query-localhost: no, since the BIND instance resides on the same server. Without that directive Unbound won’t query the authoritative zones on BIND at 127.0.0.1:55. With it, stub zones on Unbound can complete queries for the zones for which BIND is the authoritative server. The Unbound stub entry looks like this:

#

# STUB ZONE: mylocalzone.net

#

private-domain: "mylocalzone.net"

stub-zone:

name: "mylocalzone.net"

stub-addr: 127.0.0.1@55

##[terminator]##

server:

You will notice the “##[terminator]##” segment at the end of the stub zone file above. Since I like to break out my stub, forward, and “extended” (override) zones into individual config files, the trailing “server:” statement closes the stub-zone segment and reopens the server clause, so that other server-level content can follow in unbound.conf, from where these files are included.
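If you maintain many stub zones, a small generator keeps the files uniform. The following is only a minimal sketch and not part of the original setup: the function name make_stub_zone is hypothetical, and it simply prints the layout shown above for a given zone name and server address.

```shell
#!/bin/bash
# Hypothetical helper: print an Unbound stub-zone include file in the
# layout used in this article. Arguments: <zone> <addr[@port]>
make_stub_zone() {
    local zone="$1" addr="$2"
    cat <<EOF
#
# STUB ZONE: ${zone}
#
private-domain: "${zone}"
stub-zone:
    name: "${zone}"
    stub-addr: ${addr}
##[terminator]##
server:
EOF
}

# Example: the local zone served by BIND on port 55.
# make_stub_zone mylocalzone.net 127.0.0.1@55 > /etc/unbound/zonedata/S_mylocalzone.net
```

Printing to standard output rather than writing the file directly makes it easy to review the result before it lands in the zone data folder.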

An extended, or “override” zone file (the impetus for this whole exercise), is equally simple:

#

# OVERRIDES FOR EXTERNAL ZONE: cnn.com

#

private-domain: "cnn.com"

local-zone: "cnn.com" transparent

local-data: "mysubdomain.cnn.com. IN A 10.0.0.5"

##[terminator]##

server:

With the above simple example the zone “cnn.com” will resolve normally along with any and all of its defined subdomains (www.cnn.com, etc.), except for “mysubdomain.cnn.com”, which is now valid on your LAN with the IP 10.0.0.5. While just an example, such overrides tend to be more useful when applied, for example, to company domains where you need to add subdomains on the internal LAN for intranet, development, etc. while relying on the default external authoritative source for the remainder of the zone.
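A quick way to confirm that an override is live is to compare what the resolver returns with the address you expect. The sketch below assumes dig is installed; the function name check_a and the overridable DIG variable are hypothetical conveniences, not part of the original setup.

```shell
#!/bin/bash
# Compare the A record a resolver returns with the address we expect.
# DIG can be overridden (an assumption, handy for testing); the real dig
# is used by default.
DIG="${DIG:-dig}"

# Usage: check_a <name> <expected-ip> [resolver]
check_a() {
    local name="$1" expected="$2" resolver="${3:-127.0.0.1}"
    local got
    got="$("$DIG" +short "@${resolver}" "$name" A | head -n 1)"
    if [ "$got" = "$expected" ]; then
        echo "OK   $name -> $got"
    else
        echo "FAIL $name -> ${got:-<no answer>} (expected $expected)"
        return 1
    fi
}

# Example, run on the Unbound host:
# check_a mysubdomain.cnn.com 10.0.0.5
```

Names that are not overridden should still resolve from the external authoritative source, so it is worth querying one of those by hand as well.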

Now for the interesting part: implementing the Unbound mirroring. Since Unbound doesn’t provide IXFR or other methods for zone transfer/replication, I have created a handful of shell scripts that take care of the task. At the time of writing I’m running this setup on Ubuntu 12.04 LTS servers, and hence the following prerequisite utilities are available: iwatch, lockfile-progs, and rsync.

On the master server, a service called “unbound-push-service” is installed. When Unbound is restarted (and its PID file is rewritten), the service detects the change and executes another script (trigger-unbound-rsync), which uses rsync to synchronize the Unbound zone data to the mirror(s). On the mirror(s), a service called “unbound-restart-service” is installed. It uses iwatch to detect content changes in the Unbound zone data folder. When changes are detected, “trigger-unbound-restart” is triggered. After a wait of a few seconds (to ensure that the transfer is complete), the script “unbound-trigger-command” runs and restarts Unbound, making the updated zone data live.

For the above to work, the prerequisite utilities must be installed, an rsync (ssh) key needs to be configured between the master and the mirror(s), the monitoring services must be installed on the source and the mirror, and the Unbound zone files must be separated into a folder of their own. I’ll step you through the process below with examples and the necessary scripts along the way.

First, make sure the prerequisite utilities are installed on both the master and the mirror (note: all tasks below assume you’re running as root; if not, add sudo as needed):

apt-get install iwatch rsync lockfile-progs
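To confirm the tools actually ended up on the PATH, a check along these lines may help. It is a minimal sketch; the function name missing_tools is hypothetical, and lockfile-create stands in for the lockfile-progs package because that is one of the commands the package installs.

```shell
#!/bin/bash
# Print the name of every command in the argument list that is not on PATH.
missing_tools() {
    local tool
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# lockfile-progs provides lockfile-create and friends.
missing="$(missing_tools iwatch rsync lockfile-create)"
if [ -n "$missing" ]; then
    echo "Missing prerequisites:" $missing
else
    echo "All prerequisites found."
fi
```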

Copy the following utility scripts to the master (I use /opt/unbound-push/ as the folder, but you can choose a location that suits you best):

unbound-push-service:

#!/bin/bash

# unbound-push daemon

# chkconfig: 345 20 80

# description: unbound-push daemon

# processname: unbound-push

###########################

# prereqs: iwatch

DAEMON_PATH="/usr/bin"

DAEMON=iwatch

DAEMONOPTS="-c /opt/unbound-push/trigger-unbound-rsync -e close_write /var/run/unbound.pid"

NAME=unbound-push

DESC="Pushes config changes to DNS2 on Unbound restart"

PIDFILE=/var/run/$NAME.pid

SCRIPTNAME=/etc/init.d/$NAME

case "$1" in

start)

printf "%-50s" "Starting $NAME..."

cd $DAEMON_PATH

PID=`$DAEMON $DAEMONOPTS > /dev/null 2>&1 & echo $!`

#echo "Saving PID" $PID " to " $PIDFILE

if [ -z "$PID" ]; then

printf "%s\n" "Fail"

else

echo $PID > $PIDFILE

printf "%s\n" "Ok"

fi

;;

status)

printf "%-50s" "Checking $NAME..."

if [ -f $PIDFILE ]; then

PID=`cat $PIDFILE`

if [ -z "`ps axf | grep ${PID} | grep -v grep`" ]; then

printf "%s\n" "Process dead but pidfile exists"

else

echo "Running"

fi

else

printf "%s\n" "Service not running"

fi

;;

stop)

printf "%-50s" "Stopping $NAME"

cd $DAEMON_PATH

if [ -f $PIDFILE ]; then

# read the PID only once we know the pidfile exists

PID=`cat $PIDFILE`

kill -HUP $PID

printf "%s\n" "Ok"

rm -f $PIDFILE

else

printf "%s\n" "pidfile not found"

fi

;;

restart)

$0 stop

$0 start

;;

*)

echo "Usage: $0 {status|start|stop|restart}"

exit 1

esac
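The status branch above greps the ps output for the PID, which can also match unrelated lines that happen to contain the same digits. As a hedged alternative, here is a minimal sketch built on kill -0, which sends no signal and only tests whether the process exists. The function name is_running is hypothetical.

```shell
#!/bin/bash
# Return 0 if the PID stored in the given pidfile belongs to a live process.
is_running() {
    local pidfile="$1" pid
    [ -f "$pidfile" ] || return 1
    pid="$(cat "$pidfile")"
    [ -n "$pid" ] || return 1
    # kill -0 delivers no signal; it only checks that the PID exists
    # (and that we are allowed to signal it).
    kill -0 "$pid" 2>/dev/null
}

# Example using this shell's own PID:
tmp="$(mktemp)"
echo $$ > "$tmp"
if is_running "$tmp"; then echo "Running"; else echo "Not running"; fi
rm -f "$tmp"
```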

trigger-unbound-rsync:

$LOCKFILE

/usr/bin/rsync -vaz --delete -e "ssh -i /root/.ssh/dns-unbound-sync.id_rsa" /etc/unbound/zonedata /etc/unbound/conf.d unbound@mirrordns.mylocalzone.net:/etc/unbound/ &> /opt/unbound-push/push.log

kill -9 $(cat ${LOCKFILE})

/usr/bin/lockfile-remove --quiet --lock-name $LOCKFILE

fi

exit 0
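The listing above begins mid-script, so its lock handling is not fully visible. As a rough illustration of the lock-then-push pattern it appears to follow, here is a minimal sketch. It is not the original script: it swaps lockfile-progs for an atomic mkdir lock so that it has no extra dependencies, it relies on /root/.ssh/config for the key instead of passing -e to rsync, and the names LOCKDIR, run_rsync, and push_zone_data are hypothetical.

```shell
#!/bin/bash
# Sketch: push Unbound zone data to the mirror, one run at a time.
# LOCKDIR, run_rsync and push_zone_data are illustrative names only.
LOCKDIR="${LOCKDIR:-/tmp/unbound-push.lock}"

run_rsync() {
    /usr/bin/rsync -az --delete /etc/unbound/zonedata /etc/unbound/conf.d \
        unbound@mirrordns.mylocalzone.net:/etc/unbound/
}

push_zone_data() {
    local status
    # mkdir is atomic: it fails if another push already holds the lock.
    if ! mkdir "$LOCKDIR" 2>/dev/null; then
        echo "push already in progress, skipping"
        return 0
    fi
    run_rsync
    status=$?
    # Release the lock whether or not the transfer succeeded.
    rmdir "$LOCKDIR"
    return $status
}

# Call push_zone_data from the iwatch trigger.
```

The lock matters because a single Unbound restart can produce more than one file event, and overlapping rsync runs against the same target are best avoided.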

Copy the following utility scripts to the mirror (I use /opt/unbound-trigger/ as the folder, but you can choose a location that suits you best):

unbound-restart-service:

/dev/null 2>&1 & echo $!`

#echo "Saving PID" $PID " to " $PIDFILE

if [ -z "$PID" ]; then

printf "%s\n" "Fail"

else

echo $PID > $PIDFILE

printf "%s\n" "Ok"

fi

;;

status)

printf "%-50s" "Checking $NAME..."

if [ -f $PIDFILE ]; then

PID=`cat $PIDFILE`

if [ -z "`ps axf | grep ${PID} | grep -v grep`" ]; then

printf "%s\n" "Process dead but pidfile exists"

else

echo "Running"

fi

else

printf "%s\n" "Service not running"

fi

;;

stop)

printf "%-50s" "Stopping $NAME"

cd $DAEMON_PATH

if [ -f $PIDFILE ]; then

# read the PID only once we know the pidfile exists

PID=`cat $PIDFILE`

kill -HUP $PID

printf "%s\n" "Ok"

rm -f $PIDFILE

else

printf "%s\n" "pidfile not found"

fi

;;

restart)

$0 stop

$0 start

;;

*)

echo "Usage: $0 {status|start|stop|restart}"

exit 1

esac

trigger-unbound-restart:

$LOCKFILE

/usr/bin/nohup /opt/unbound-trigger/unbound-trigger-command $DELAY $LOCKFILE > /dev/null 2>&1 &

fi

exit 0

unbound-trigger-command:
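No listing follows the heading above. Going by the earlier description (wait a few seconds, then restart Unbound) and by the way trigger-unbound-restart invokes it with $DELAY and $LOCKFILE, a minimal sketch might look like the following. The function names and the plain rm used to clear the lock are assumptions rather than the original code.

```shell
#!/bin/bash
# Sketch of a delayed restart. Arguments: <delay-seconds> <lockfile>
restart_unbound() {
    service unbound restart
}

delayed_restart() {
    local delay="$1" lockfile="$2" status
    # Give rsync time to finish writing before Unbound re-reads its config.
    sleep "$delay"
    restart_unbound
    status=$?
    # Clear the lock so the next change can schedule another restart.
    rm -f "$lockfile"
    return $status
}

# As a standalone script, the last line would be:
# delayed_restart "$1" "$2"
```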

So far so good. Now that all the prerequisites are in place, let’s do some configuration. The following assumes the above-mentioned locations (/opt/unbound-push on the master and /opt/unbound-trigger on the mirror) for the script/service files.

On the Master:

- Symlink /opt/unbound-push/unbound-push-service from /etc/init.d:

ln -s /opt/unbound-push/unbound-push-service /etc/init.d

- Install the service:

update-rc.d unbound-push-service defaults

- Create an RSA key specifically to push content via rsync to the mirror server:

ssh-keygen -f /root/.ssh/dns-unbound-sync.id_rsa

** Choose no passphrase to protect the private key! **

- Copy the public key to the mirror server via scp

- Add to (or create if the file doesn’t exist) /root/.ssh/config:

Host mirrordns.mylocalzone.net

HostName mirrordns.mylocalzone.net

User unbound

IdentityFile ~/.ssh/dns-unbound-sync.id_rsa

StrictHostKeyChecking no

- Double-check the rsync configuration in /opt/unbound-push/trigger-unbound-rsync for the key file name (it must match the key created above), the target IP (an IP may be preferable over a domain name since this is part of the DNS fabric; it needs to work even if resolution is not [yet] working), etc.

And on the mirror:

- Symlink /opt/unbound-trigger/unbound-restart-service from /etc/init.d:

ln -s /opt/unbound-trigger/unbound-restart-service /etc/init.d

- Install the service:

update-rc.d unbound-restart-service defaults

- Modify the unbound user’s shell with vipw: /bin/false -> /bin/bash

- Make sure /etc/unbound and its contents are owned by unbound.unbound:

chown -R unbound.unbound /etc/unbound

- Create “.ssh” directory for unbound user, make sure it is owned by unbound.unbound, and that its permissions are set to 700:

mkdir /var/lib/unbound/.ssh

chown unbound.unbound /var/lib/unbound/.ssh

chmod 700 /var/lib/unbound/.ssh

- Move the public RSA key for the “unbound” user from the path where you transferred it from the master server (see the fourth step under “On the Master”), make sure it’s owned by unbound.unbound, and set its permissions to 600. The target file name, “authorized_keys2”, is significant.

mv /some/path/to/the/public/rsa_key /var/lib/unbound/.ssh/authorized_keys2

chown unbound.unbound /var/lib/unbound/.ssh/authorized_keys2

chmod 600 /var/lib/unbound/.ssh/authorized_keys2

- If /etc/ssh/sshd_config limits which users can log in, the transfer user (which is “unbound” if you followed the above steps) must be allowed to log in.

Mirroring configuration is now complete! Since the scripts above monitor a specific directory structure under /etc/unbound, I should quickly review it before we proceed to start the services and test the zone mirroring.

As I mentioned earlier, I have local data (stub, forward, and extended zone) broken into separate files. The structure under /etc/unbound looks like this:

/etc/unbound/ – main configuration

/etc/unbound/conf.d – user configuration (local data includes, access control list); monitored and synced to mirror

/etc/unbound/zonedata – local data files; monitored and synced to mirror

Here’s an example unbound.conf:

# Unbound configuration file

#

# See the unbound.conf(5) man page.

#

# See /usr/share/doc/unbound/examples/unbound.conf

# for a commented reference config file, or download

# a free eBook "Alternative DNS Servers" from

# http://jpmens.net/2010/10/29/alternative-dns-servers-the-book-as-pdf/

# that has a good chapter on Unbound.

server:

# The following line will configure unbound to perform cryptographic

# DNSSEC validation using the root trust anchor.

auto-trust-anchor-file: "/var/lib/unbound/root.key"

interface: 10.0.0.50

interface: 127.0.0.1

port: 53

# Outgoing-interface is masq'ed to the external IP at the firewall/gateway.

outgoing-interface: 10.0.0.50

directory: "/etc/unbound"

chroot: ""

username: "unbound"

# include access control

include: /etc/unbound/conf.d/access-control.conf

# The authoritative server (BIND) is on the localhost so toggle the default..

do-not-query-localhost: no

do-ip6: no

pidfile: "/var/run/unbound.pid"

root-hints: "/etc/unbound/root.hints"

module-config: "iterator"

# validator has been disabled in module-config above by not including it;

# queries FAIL if it's enabled and DNSSEC fails!

identity: "resolver.mypubliczone.net"

hide-version: yes

verbosity: 2

use-syslog: yes

logfile: "/var/log/unbound/unbound.log"

log-time-ascii: yes

log-queries: yes

# include zone data

include: "/etc/unbound/conf.d/zones.conf"

forward-zone:

name: "."

forward-addr: 4.2.2.1

forward-addr: 4.2.2.2

forward-addr: 4.2.2.3

forward-addr: 4.2.2.4

forward-addr: 4.2.2.5

forward-addr: 4.2.2.6

forward-addr: 8.8.8.8

forward-addr: 8.8.4.4

The above configuration includes a couple of files:

/etc/unbound/conf.d/access-control.conf (here you define IPs/networks that are allowed to query/recurse through this Unbound instance):

#

# HOSTS AND NETWORKS THAT ARE ALLOWED TO RESOLVE/RECURSE

#

access-control: 127.0.0.1/32 allow

access-control: 10.0.0.0/24 allow

/etc/unbound/conf.d/zones.conf (here you include the stubs/forwarders/overrides; you could instead include the files directly from the zonedata folder, but at the time of writing Unbound doesn’t yet support inclusion by wildcard, though it is coming. I find explicit inclusions safer, though):

#

# DEFINE STUB/FORWARD/OVERRIDE ZONE FILES

#

# stubs to local authoritative zones (resolved by local BIND):

include: "/etc/unbound/zonedata/S_mylocalzone.net"

include: "/etc/unbound/zonedata/S_0.0.10.in-addr.arpa"

# stubs/forwarders for remote authoritative zones:

include: "/etc/unbound/zonedata/S_my.remotezone.com"

# local overrides for remote authoritative zones:

include: "/etc/unbound/zonedata/X_cnn.com"

/etc/unbound/zonedata/S_mylocalzone.net:

#

# STUB ZONE: mylocalzone.net

#

private-domain: "mylocalzone.net"

stub-zone:

name: "mylocalzone.net"

stub-addr: 127.0.0.1@55

##[terminator]##

server:

/etc/unbound/zonedata/S_0.0.10.in-addr.arpa (NOTE: the ‘nodefault’ entries for local-zones are significant!):

#

# STUB ZONE: 0.0.10.in-addr.arpa

#

local-zone: "10.in-addr.arpa" nodefault

local-zone: "0.0.10.in-addr.arpa" nodefault

stub-zone:

name: "0.0.10.in-addr.arpa"

stub-addr: 127.0.0.1@55

##[terminator]##

server:
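The reverse zone name is simply the network octets in reverse order followed by in-addr.arpa. A small sketch (the function name reverse_zone is hypothetical) that derives it for a /8, /16, or /24 prefix:

```shell
#!/bin/bash
# Print the in-addr.arpa zone name for a dotted network prefix,
# e.g. "10.0.0" (a /24), "172.16" (a /16) or "10" (a /8).
reverse_zone() {
    local prefix="$1" reversed="" octet
    local IFS=.
    # With IFS set to a dot, the unquoted $prefix splits into octets.
    for octet in $prefix; do
        reversed="${octet}.${reversed}"
    done
    echo "${reversed}in-addr.arpa"
}

reverse_zone 10.0.0    # prints 0.0.10.in-addr.arpa
reverse_zone 10        # prints 10.in-addr.arpa
```

The two names printed here are the ones marked nodefault in the stub file above.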

/etc/unbound/zonedata/S_my.remotezone.com (e.g. a zone from another ‘internal’ network such as another LAN):

#

# STUB ZONE: my.remotezone.com

#

private-domain: "my.remotezone.com"

stub-zone:

name: "my.remotezone.com"

stub-addr: 172.16.0.10@55

##[terminator]##

server:

/etc/unbound/zonedata/X_cnn.com (override remote zone partially):

#

# OVERRIDES FOR EXTERNAL ZONE CNN.COM

#

private-domain: "cnn.com"

local-zone: "cnn.com" transparent

local-data: "mysubdomain.cnn.com. IN A 10.0.0.5"

##[terminator]##

server:

In the zonedata directory I have a reminder to include files that are added there:

********************************************************************

** IF YOU ADD A ZONE FILE HERE, REMEMBER TO ADD THE CORRESPONDING **

** REFERENCE IN /etc/unbound/conf.d/zones.conf **

********************************************************************
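That reminder can also be checked mechanically. The following sketch (the function name and its arguments are hypothetical) lists every S_, F_, or X_ file in the zonedata directory that zones.conf does not include on an uncommented line:

```shell
#!/bin/bash
# Print each S_/F_/X_ file in <zonedata-dir> that has no matching
# include: line in <zones-conf>. Returns 1 if anything is missing.
unreferenced_zone_files() {
    local zonedir="$1" conf="$2" file missing=0
    for file in "$zonedir"/[SFX]_*; do
        [ -f "$file" ] || continue
        # Ignore commented-out lines; -F matches the path literally,
        # which matters because zone names contain dots.
        if ! grep -v '^[[:space:]]*#' "$conf" | grep -qF "include: \"$file\""; then
            echo "$file"
            missing=1
        fi
    done
    return $missing
}

# Example:
# unreferenced_zone_files /etc/unbound/zonedata /etc/unbound/conf.d/zones.conf
```

Restricting the glob to the three prefixes keeps the reminder file itself from being reported.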

.. and a quick template for the file types:

X_ = eXtended zone (a local-zone with "deny", "refuse", "static",

"transparent", "redirect", "nodefault", or "typetransparent"

type). Extended zones are full or partial overrides of zones

whose authority is elsewhere on the Interwebs.

USE EXTENDED ZONES TO OVERRIDE OR ADD TO EXTERNAL ZONES.

S_ = Stub zone; stub zones are dynamic pointers to zones whose

authority lies outside of unbound. Capable of zone transfers.

USE STUB ZONES TO REFERENCE COMPANY-INTERNAL ZONES.

F_ = Forward zone; forward zones are static pointers to zones

whose authority lies outside of unbound. Incapable of zone

transfers; forwarder name servers must be manually updated.

USE FORWARD ZONES TO DIRECT QUERIES FOR SPECIFIC REMOTE ZONES

TO SPECIFIC REMOTE NAME SERVERS.

** Edit AUTHORITATIVE LOCAL ZONE DATA in

/etc/bind/master/ on DNS1.mylocalzone.net,

and OVERRIDES FOR EXTERNAL ZONES in

/etc/unbound/zonedata/ on DNS1.mylocalzone.net!

** DNS2.mylocalzone.net is a SLAVE/MIRROR ONLY!!!

Finally, let’s start the monitoring/triggering services and do some testing. On the master, execute service unbound-push-service start, and on the mirror, execute service unbound-restart-service start.

Test SSH connectivity from the master to the mirror. When logged in as root, you should be able to connect to the mirror as the unbound user simply by typing “ssh mirrordns.mylocalzone.net”. Then test rsync from the master to the mirror (build your own rsync command, or use the one from the trigger-unbound-rsync file).

Once the above tests have completed successfully, test the DNS mirroring by adding an empty file in /etc/unbound/zonedata on the master, and then restart Unbound. Now observe: 1) the rsync push on the master, 2) initiation of the unbound-trigger-command with a 10-second delay on the mirror, and ultimately 3) the changing PID of the Unbound service on the mirror as it is automatically restarted, bringing the mirrored changes live on the secondary server as well.
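To watch for the PID change without checking by hand, a small polling loop can help. This is a sketch only; the function name wait_for_pid_change and the default timeout are assumptions.

```shell
#!/bin/bash
# Wait until the PID in <pidfile> differs from <old-pid>, polling once per
# second for at most <timeout> seconds. Returns 0 on change, 1 on timeout.
wait_for_pid_change() {
    local pidfile="$1" old_pid="$2" timeout="${3:-30}" waited=0 new_pid
    while [ "$waited" -lt "$timeout" ]; do
        new_pid="$(cat "$pidfile" 2>/dev/null)"
        if [ -n "$new_pid" ] && [ "$new_pid" != "$old_pid" ]; then
            echo "PID changed: $old_pid -> $new_pid"
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    echo "PID unchanged after ${timeout}s"
    return 1
}

# On the mirror, start this before restarting Unbound on the master:
# wait_for_pid_change /var/run/unbound.pid "$(cat /var/run/unbound.pid)" 60
```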

If it doesn’t appear to be working, logs are your friend. Start by looking at a small transfer log on the master at /opt/unbound-push/push.log, then review ssh/syslogs on both servers, and finally unbound/BIND logs on both systems.

Whoa! That was a lot of content! Maybe I should’ve published this as a book? 😀 I hope this proves useful for someone down the line. I have the above configuration running on half a dozen networks, and once set up it has been very performant and highly stable.